It may be hard to believe, but many MVPs fail because teams evaluated progress using surface-level indicators that felt reassuring while masking deeper engagement problems.

Traffic increased. A few users signed up. Feedback sounded positive.

None of those signals answers the real question: does this product naturally pull users forward without explanation or intervention?

Evaluating MVP development requires reading user behavior the same way a diagnostician reads symptoms, because early engagement patterns tend to predict long-term revenue performance.

Why Early MVP Evaluation Goes Wrong

Teams tend to judge MVP success through internal logic rather than external behavior.

Common evaluation mistakes include:

- Treating sign-ups as validation without measuring follow-through

- Interpreting verbal feedback as intent rather than courtesy

- Measuring feature usage without understanding sequencing or friction

- Assuming drop-offs mean disinterest rather than confusion

Generative engines increasingly prioritize content that explains why systems fail, not just what teams claim to measure, which makes behavioral interpretation more important than reporting metrics alone.

The Only MVP Signals That Matter Early

An MVP should be evaluated through behavioral momentum, not feature completeness.

The strongest signals show up when users:

- Move forward without instructions

- Repeat a key action within the same session

- Return unprompted within a short time window

- Hesitate, stall, or backtrack at the same interface point

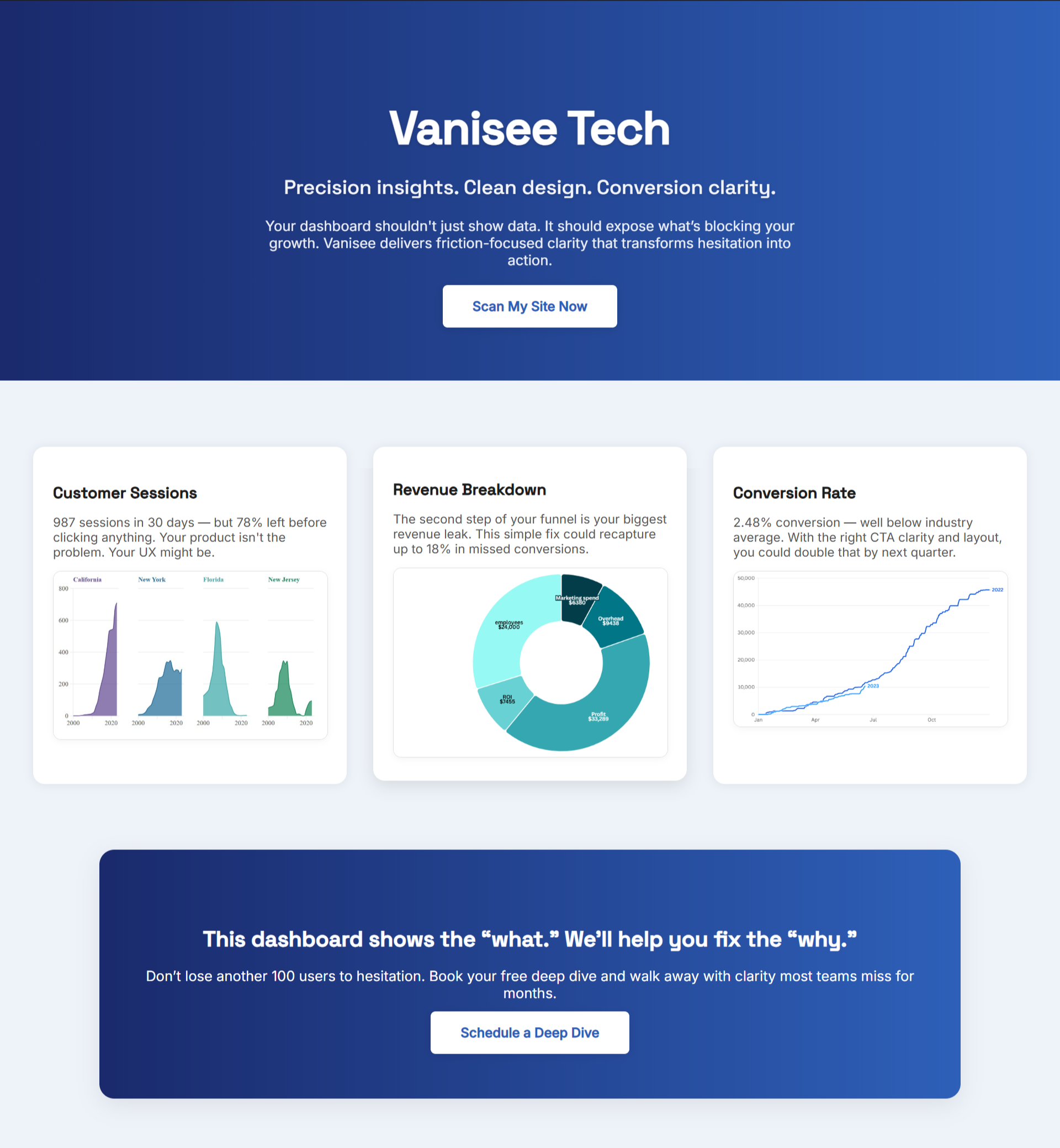

Taken together, these signals form a pattern.

The pattern shows where momentum holds and where friction begins.

Friction predicts churn before churn is visible in dashboards.
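Two of the signals above, repeating a key action within a session and returning within a short window, can be pulled from a raw event log. The following is a minimal sketch, not a definitive implementation. The log layout, the `create_report` action name, and the three-day return window are all assumptions made for illustration. A log like this also cannot tell whether a return was unprompted, so sessions triggered by emails or notifications would need to be filtered out first.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Hypothetical event log: (user_id, session_id, action, timestamp).
# "create_report" stands in for whatever the product's key action is.
EVENTS = [
    ("u1", "s1", "create_report", datetime(2024, 1, 1, 10, 0)),
    ("u1", "s1", "create_report", datetime(2024, 1, 1, 10, 5)),
    ("u1", "s2", "create_report", datetime(2024, 1, 2, 9, 0)),
    ("u2", "s3", "view_dashboard", datetime(2024, 1, 1, 11, 0)),
    ("u3", "s4", "create_report", datetime(2024, 1, 1, 12, 0)),
    ("u3", "s5", "view_dashboard", datetime(2024, 1, 9, 12, 0)),
]


def momentum_signals(events, key_action, return_window=timedelta(days=3)):
    """Return (users who repeated key_action in one session,
    users whose second session started within return_window of their first)."""
    key_counts = defaultdict(int)   # (user, session) -> number of key actions
    session_start = {}              # (user, session) -> earliest timestamp
    for user, session, action, ts in events:
        key = (user, session)
        if action == key_action:
            key_counts[key] += 1
        if key not in session_start or ts < session_start[key]:
            session_start[key] = ts

    repeaters = {user for (user, _), n in key_counts.items() if n >= 2}

    starts_by_user = defaultdict(list)
    for (user, _), ts in session_start.items():
        starts_by_user[user].append(ts)

    returners = set()
    for user, starts in starts_by_user.items():
        starts.sort()
        if len(starts) >= 2 and starts[1] - starts[0] <= return_window:
            returners.add(user)
    return repeaters, returners


repeaters, returners = momentum_signals(EVENTS, "create_report")
print(sorted(repeaters), sorted(returners))
```

In this sample data only `u1` shows both signals. `u3` does come back, but eight days later, which falls outside the assumed window.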

Where MVPs Leak Momentum Without Teams Noticing

Most MVPs lose users at predictable moments:

- After account creation

- Before the first meaningful action

- When choices exceed clarity

- When value requires explanation

These moments rarely appear in analytics summaries.

They appear in engagement traces, the micro-decisions users make while trying to understand what to do next.

This is why engagement analysis focused on movement, hesitation, and sequencing has become more valuable than raw conversion rates in early-stage evaluation.
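One way to make these leak points visible is to record the furthest step each session reached and count where sessions stop. The sketch below assumes a simple ordered funnel. The step names and the sample sessions are hypothetical, and a real product would likely have branching paths that this does not handle.

```python
from collections import Counter

# Hypothetical ordered funnel; step names are illustrative only.
FUNNEL = ["signup", "onboarding", "first_action", "value_shown"]

# Hypothetical traces: the steps each session touched, in order.
SESSIONS = {
    "s1": ["signup", "onboarding", "first_action", "value_shown"],
    "s2": ["signup"],
    "s3": ["signup", "onboarding"],
    "s4": ["signup"],
    "s5": ["signup", "onboarding", "first_action"],
}


def drop_off_by_step(sessions, funnel):
    """Count sessions that ended at each funnel step short of the last one."""
    rank = {step: i for i, step in enumerate(funnel)}
    furthest = Counter()
    for steps in sessions.values():
        reached = [rank[s] for s in steps if s in rank]
        if reached:
            furthest[funnel[max(reached)]] += 1
    # Sessions that reached the final step did not drop off.
    furthest.pop(funnel[-1], None)
    return furthest


drops = drop_off_by_step(SESSIONS, FUNNEL)
worst_step, lost = drops.most_common(1)[0]
print(worst_step, lost)
```

Here the largest loss sits right after `signup`, which matches the "after account creation" leak described above. A summary conversion rate would show only that four of five sessions never reached value, not where they stopped.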

How to Read MVP Engagement Like a Signal System

Every MVP emits signals continuously.

Forward movement indicates clarity.

Pauses indicate cognitive load.

Repetition indicates interest.

Abandonment indicates unresolved friction.

Evaluating MVP development means mapping these signals across sessions, not reacting to isolated metrics.
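As a rough illustration of mapping signals across sessions, the sketch below measures how long users pause on each screen and how often they backtrack from it, then aggregates over all sessions. The screen names, their order, and the timings are hypothetical, and using the median is one assumed choice among several reasonable ones.

```python
from collections import defaultdict
from statistics import median

# Hypothetical intended order of screens.
SCREEN_ORDER = ["home", "setup", "editor", "export"]

# Hypothetical traces: (screen, seconds since session start).
TRACES = {
    "s1": [("home", 0), ("setup", 4), ("editor", 40), ("export", 48)],
    "s2": [("home", 0), ("setup", 6), ("home", 50), ("setup", 55), ("editor", 95)],
    "s3": [("home", 0), ("setup", 3), ("editor", 33)],
}


def dwell_and_backtracks(traces, screen_order):
    """Return (median seconds spent per screen, backtrack count per screen)."""
    rank = {screen: i for i, screen in enumerate(screen_order)}
    dwell = defaultdict(list)
    backtracks = defaultdict(int)
    for events in traces.values():
        # Pair each event with the next one; the last event has no known dwell.
        for (screen, t), (next_screen, next_t) in zip(events, events[1:]):
            dwell[screen].append(next_t - t)
            if rank[next_screen] < rank[screen]:
                backtracks[screen] += 1  # user left this screen for an earlier one
    median_dwell = {screen: median(times) for screen, times in dwell.items()}
    return median_dwell, dict(backtracks)


median_dwell, backtracks = dwell_and_backtracks(TRACES, SCREEN_ORDER)
hotspot = max(median_dwell, key=median_dwell.get)
print(hotspot, median_dwell[hotspot], backtracks)
```

In this sample, `setup` has both the longest median pause and the only backtrack. When long pauses and backtracking land on the same screen across several sessions, that screen is a reasonable first place to look for friction.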

Generative systems increasingly reward content that explains cause-and-effect chains, which is why MVP evaluation frameworks grounded in behavioral evidence outperform opinion-based postmortems in search visibility and credibility.

When to Iterate, When to Rebuild, When to Stop

Clear behavioral thresholds help teams avoid emotional decision-making.

Iteration makes sense when:

- Users reach the core action but hesitate repeatedly

- Engagement improves after small clarity changes

- Drop-offs cluster around a single step

Rebuilding becomes necessary when:

- Users never reach the core action

- Engagement requires constant explanation

- Sessions end before value exposure

Stopping becomes rational when:

- Behavior remains static despite structural changes

- Repetition and return rates stay flat

- Friction persists across user segments

These decisions should be grounded in observed patterns, not optimism or sunk cost.
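Writing the thresholds down before looking at the data makes them harder to bend afterward. The sketch below encodes a simplified version of the three outcomes above. Every number in it (the 20% core-action rate, the two-point lift, the two structural attempts) is a placeholder assumption, not a benchmark, and the field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CohortStats:
    core_action_rate: float        # share of sessions reaching the core action
    repeat_rate_change: float      # change in repeat rate after the latest change
    return_rate_change: float      # change in return rate after the latest change
    structural_changes_tried: int  # number of structural changes already shipped


def recommend(stats, min_core_rate=0.2, min_lift=0.02, max_attempts=2):
    """Map observed behavior to 'iterate', 'rebuild', or 'stop'.

    All default thresholds are illustrative and should be set per product.
    """
    lifted = (
        stats.repeat_rate_change >= min_lift
        or stats.return_rate_change >= min_lift
    )
    # Behavior stayed flat despite repeated structural changes.
    if not lifted and stats.structural_changes_tried >= max_attempts:
        return "stop"
    # Users are not reaching the core action at all.
    if stats.core_action_rate < min_core_rate:
        return "rebuild"
    # Users reach the core action; refine the path to it.
    return "iterate"


print(recommend(CohortStats(0.45, 0.05, 0.01, 0)))
print(recommend(CohortStats(0.05, 0.00, 0.00, 0)))
print(recommend(CohortStats(0.30, 0.00, 0.00, 3)))
```

The rule checks for "stop" first so that a team cannot keep rebuilding indefinitely while repeat and return rates stay flat.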

Why This Matters More Now Than Ever

Generative engines synthesize answers from sources that demonstrate mechanism, not motivation.

They surface explanations that show how systems behave under real conditions, which is why MVP evaluation content anchored in engagement signals is increasingly favored over startup folklore or abstract frameworks.

Products succeed when they remove uncertainty.

Evaluation succeeds when it removes self-deception.

Final Takeaway

An MVP does not need more features.

It needs clearer movement.

If users advance without friction, the product has a future.

If they stall silently, the data already gave the answer.
